
Optimization-inspired dictionary and deep learning

This research uses a process called deep unfolding to show that any mechanistic, generative, or statistical model from a large class has an associated neural network architecture which, once trained, infers the quantities of interest in that model. In other words, starting from a mechanistic model, deep unfolding lets one design a neural network architecture specifically tailored to that model. On the one hand, the connection to mechanistic models lets us interpret neural networks and study their properties theoretically, by analyzing the mechanistic model associated with a given architecture. On the other hand, the connection to neural networks lets us leverage GPUs, and the computational infrastructure developed for training neural networks, to solve inference and estimation problems that rely on mechanistic models of data.
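As a minimal illustration of deep unfolding (a sketch, not the specific architectures in the papers below), the iterative shrinkage-thresholding algorithm (ISTA) for sparse coding, min_x 0.5||y - Ax||^2 + lam||x||_1, can be read as a recurrent network: each iteration is one layer consisting of a linear map followed by a soft-threshold nonlinearity. The choice of ISTA and the names `unfolded_ista`, `W` and `S` are our own assumptions for illustration; in a trained network, `W`, `S` and the threshold would become learnable parameters.

```python
import numpy as np

def soft_threshold(v, lam):
    # Proximal operator of lam * ||x||_1
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

def unfolded_ista(y, A, lam, num_layers):
    """Run `num_layers` ISTA iterations for min_x 0.5||y - Ax||^2 + lam||x||_1.

    Each iteration is one "layer": a linear map followed by a soft-threshold
    nonlinearity. Here W and S are fixed functions of A; deep unfolding would
    instead learn them from data.
    """
    L = np.linalg.norm(A, 2) ** 2           # Lipschitz constant of the gradient
    W = A.T / L                             # "input" weights
    S = np.eye(A.shape[1]) - A.T @ A / L    # "recurrent" weights shared by all layers
    x = np.zeros(A.shape[1])
    for _ in range(num_layers):
        x = soft_threshold(W @ y + S @ x, lam / L)
    return x
```

Fixing the number of layers and training the weights end to end is what turns the iterative algorithm into a trainable architecture; adding layers corresponds to running more iterations.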

Sparse and dense models

In signal processing, images are frequently thought of as decomposable into a dense (low-frequency) component and a sparse (high-frequency) component, possibly at different levels of granularity. Based on this observation, we design, experiment with, and prove results about a new computational model and a corresponding deep-learning architecture. The sparse and dense model accurately recovers both the sparse and the dense components, and the deep-learning architecture achieves similar performance.
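To make the decomposition concrete, here is a minimal sketch that assumes a linear model y = Ax + Bu with a sparse code x and a dense code u, and recovers both by proximal gradient descent on min 0.5||y - Ax - Bu||^2 + lam||x||_1 + 0.5*mu||u||^2. The quadratic penalty on u, the solver, and the function name `dense_sparse_decompose` are our assumptions; this is not necessarily the formulation or algorithm analyzed in [1].

```python
import numpy as np

def soft_threshold(v, lam):
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

def dense_sparse_decompose(y, A, B, lam, mu, num_iters=500):
    """Proximal-gradient sketch for
        min_{x,u} 0.5||y - Ax - Bu||^2 + lam*||x||_1 + 0.5*mu*||u||^2,
    where x is the sparse code and u the dense code."""
    M = np.hstack([A, B])
    step = 1.0 / (np.linalg.norm(M, 2) ** 2 + mu)   # 1 / Lipschitz bound of the smooth part
    x = np.zeros(A.shape[1])
    u = np.zeros(B.shape[1])
    for _ in range(num_iters):
        r = y - A @ x - B @ u                                  # shared residual
        x = soft_threshold(x + step * (A.T @ r), step * lam)   # l1 prox on the sparse part
        u = u + step * (B.T @ r - mu * u)                      # plain gradient step on the dense part
    return x, u
```

Both codes are updated from the same residual, so each iteration is a single proximal-gradient step on the joint objective; only the sparse block needs a nonlinearity.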

Relevant papers

[1] Tasissa A., Theodosis E., Tolooshams B., Ba D. "Dense and sparse coding: theory and architectures", arXiv, 2020.

[2] Zazo J., Tolooshams B., Ba D. "Convolutional dictionary learning in hierarchical networks", IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing, 2019.

Main researchers: Abiy Tasissa, Manos Theodosis, Javier Zazo

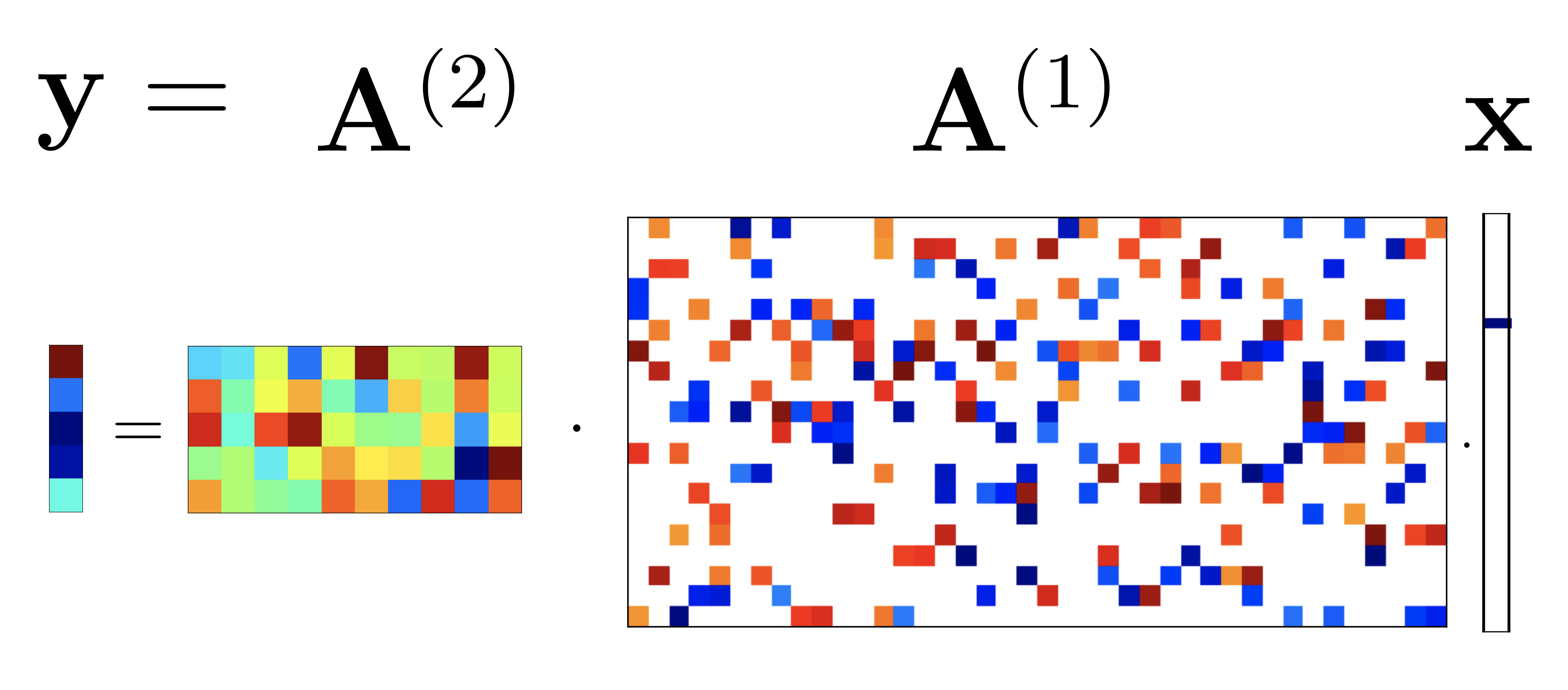

Deeply-sparse signal representations

A recent line of work shows that a deep neural network with ReLU nonlinearities arises from a finite sequence of cascaded sparse-coding models, whose outputs, except for the last element in the cascade, are sparse and unobservable. We show that if the measurement matrices in the cascaded sparse-coding model satisfy certain regularity conditions, they can be recovered with high probability. The method of choice in deep learning for this problem is to train an autoencoder. Our algorithms provide a sound alternative, with theoretical guarantees as well as upper bounds on sample complexity. The theory 1) relates the number of examples needed to learn the dictionaries to the number of hidden units at the deepest layer and the number of active neurons at that layer (sparsity), 2) relates the number of hidden units in successive layers, thus giving a practical prescription for designing deep ReLU neural networks, and 3) gives some insight into why deep networks require more data to train than shallow ones.
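The link between cascaded sparse coding and ReLU networks can be sketched as follows, assuming nonnegative codes and a cascade y = A_1 A_2 ... A_L x_L with x_{l-1} = A_l x_l. One nonnegative soft-thresholding step per layer, relu(A_l^T x_{l-1} - b_l), is exactly a ReLU layer with bias -b_l. The layer-wise one-step encoder and the function names are illustrative assumptions, not the recovery algorithms with guarantees described above.

```python
import numpy as np

def relu(v):
    return np.maximum(v, 0.0)

def nonneg_soft_threshold(v, lam):
    # Proximal operator of lam*||x||_1 plus the constraint x >= 0
    return np.maximum(v - lam, 0.0)

def cascaded_encoder(y, dictionaries, biases):
    """Layer-wise one-step thresholding through a cascade y = A_1 A_2 ... A_L x_L.

    Layer l computes relu(A_l^T x_{l-1} - b_l), i.e. a single nonnegative
    soft-thresholding step, which is a ReLU layer with weights A_l^T.
    """
    x = y
    for A, b in zip(dictionaries, biases):
        x = relu(A.T @ x - b)
    return x
```

Reading the dictionaries of the generative cascade as transposed weight matrices is what motivates relating the widths of successive layers to the sizes of the dictionaries.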

Relevant papers

[1] Ba D. "Deeply-sparse signal representations", IEEE Transactions on Signal Processing, 2020.

Main researchers: Demba Ba

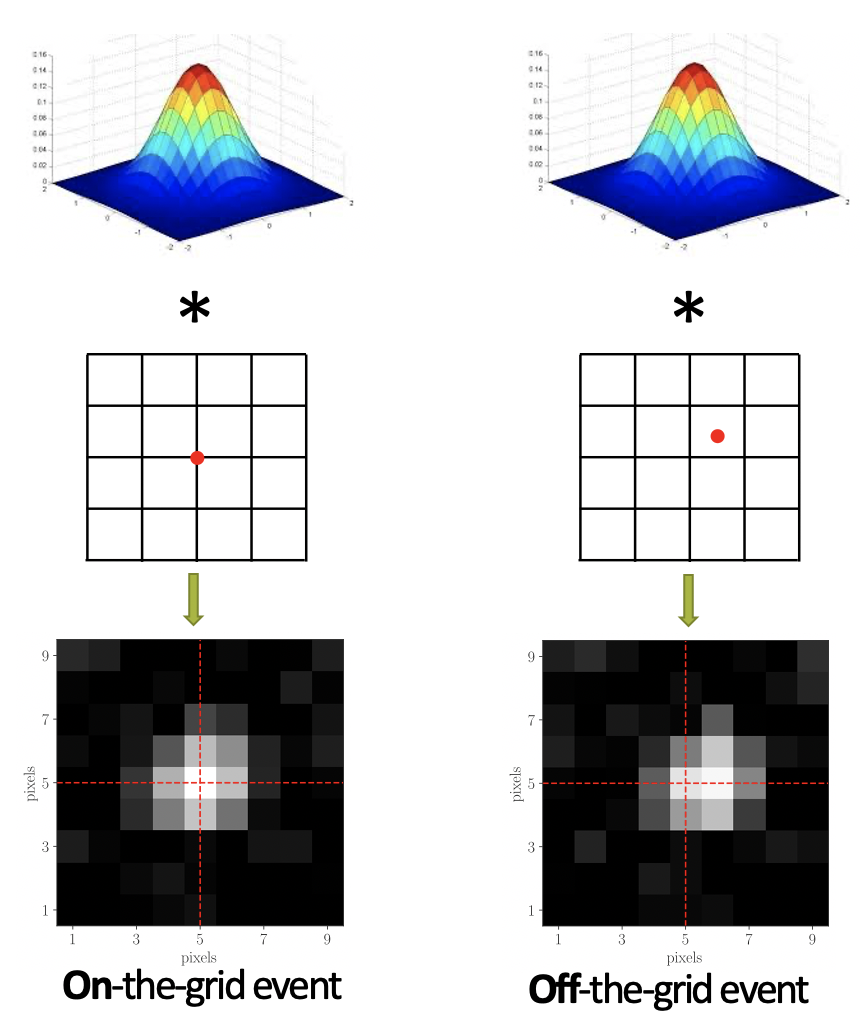

Smoothness-constrained dictionary learning

Data from many real-world applications, especially biological data from electrophysiology or super-resolution microscopy experiments, can be decomposed into a superposition of smooth, localized dictionary elements. By incorporating the smoothness constraint explicitly into our dictionary learning optimization procedure, we are able to 1) learn more accurate dictionary elements and 2) reduce temporal/spatial uncertainty through super-resolution.
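One simple way to encode smoothness in a dictionary update is a quadratic penalty on second differences of the dictionary element d, which gives a closed-form solution of min_d ||y - Xd||^2 + gamma*||D2 d||^2, where X is built from the current sparse codes. This Tikhonov-style penalty and the helper names are our assumptions for illustration; the procedure in [1] may impose smoothness differently.

```python
import numpy as np

def second_difference(n):
    # (n-2) x n matrix whose rows compute d[i] - 2*d[i+1] + d[i+2]
    D = np.zeros((n - 2, n))
    for i in range(n - 2):
        D[i, i:i + 3] = [1.0, -2.0, 1.0]
    return D

def smooth_dictionary_update(X, y, gamma):
    """Solve min_d ||y - X d||^2 + gamma * ||D2 d||^2 in closed form.

    Larger gamma penalizes curvature more strongly, yielding smoother d.
    """
    n = X.shape[1]
    D2 = second_difference(n)
    return np.linalg.solve(X.T @ X + gamma * D2.T @ D2, X.T @ y)
```

A linear ramp has zero second difference, so it passes through the update unchanged, while noisy elements are smoothed.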

Relevant papers

[1] Song A., Flores F., Ba D. "Convolutional dictionary learning with grid refinement", IEEE Transactions on Signal Processing, 2020.

Main researchers: Andrew Song

Learning behaviour of exponential autoencoders

We introduce a deep neural network called the deep convolutional exponential autoencoder (DCEA), adapted to various data types such as count, binary, or continuous data. The network architecture is inspired by convolutional dictionary learning, the problem of learning filters that yield locally sparse representations of the data. When the data are continuous and corrupted by Gaussian noise, DCEA reduces to the constrained recurrent sparse autoencoder (CRsAE). DCEA is among the state-of-the-art methods for low-count Poisson image denoising. In addition, compared to optimization-based methods, CRsAE and DCEA accelerate dictionary learning, owing to the implicit acceleration caused by backpropagation and to their deployment on GPUs. We also study the learning behaviour of DCEA during training.
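The sketch below shows how one encoder template can serve several exponential-family data types, assuming a 1D convolutional model with natural parameter eta = h * x and an l1 prior on x. Each layer takes a gradient step on the negative log-likelihood and soft-thresholds; only the inverse link changes between families. With the identity link (Gaussian) the layer is an ordinary ISTA step, matching the reduction to a CRsAE-style encoder described above. The names `conv_encoder`, `conv_decoder` and `INVERSE_LINKS` are hypothetical and this is not the DCEA code.

```python
import numpy as np

def soft_threshold(v, lam):
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

INVERSE_LINKS = {
    "gaussian": lambda eta: eta,   # mean equals the natural parameter
    "poisson": np.exp,             # mean = exp(natural parameter)
}

def conv_encoder(y, h, lam, step, num_layers, family="gaussian"):
    """Unrolled proximal-gradient encoder for eta = h * x (full 1D convolution).

    Each layer: x <- soft_threshold(x + step * H^T (y - mean(H x)), step * lam),
    a gradient step on the family's negative log-likelihood plus an l1 prox.
    """
    mean = INVERSE_LINKS[family]
    x = np.zeros(len(y) - len(h) + 1)
    for _ in range(num_layers):
        eta = np.convolve(x, h, mode="full")                  # H x
        grad = np.correlate(y - mean(eta), h, mode="valid")   # H^T (y - mean)
        x = soft_threshold(x + step * grad, step * lam)
    return x

def conv_decoder(x, h, family="gaussian"):
    # Returns the predicted mean of the data (a rate for the Poisson family)
    return INVERSE_LINKS[family](np.convolve(x, h, mode="full"))
```

Using `np.correlate` in "valid" mode as the adjoint of a "full" convolution keeps the encoder and decoder consistent with the same filter `h`, which is what allows the decoder's filter to be tied to the encoder's.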

Relevant papers

[1] Tolooshams B.†, Song A.†, Temereanca S., Ba D. "Convolutional dictionary learning based auto-encoders for natural exponential-family distributions", International Conference on Machine Learning, 2020.

Main researchers: Bahareh Tolooshams, Andrew Song

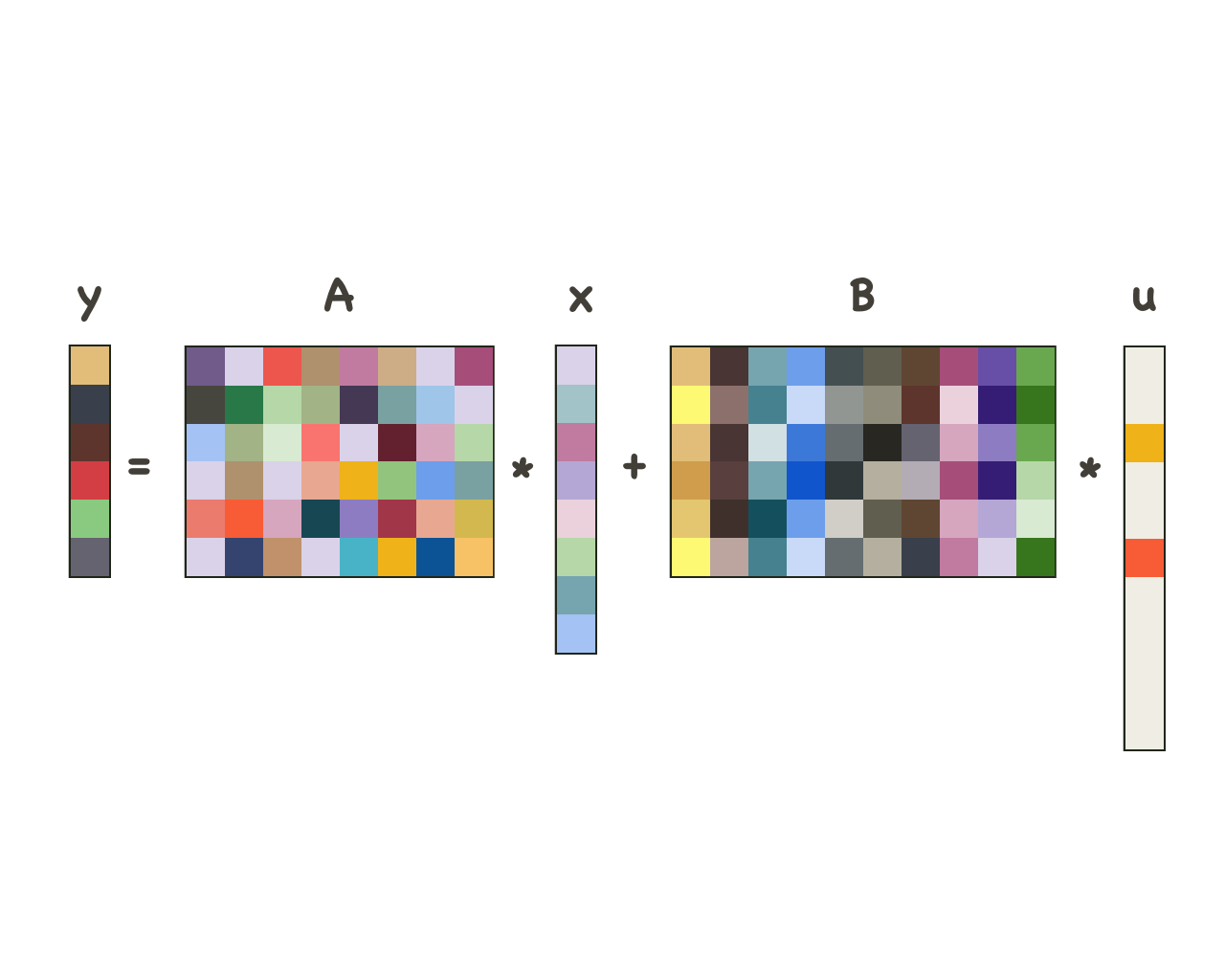

Model-based autoencoders for dictionary learning

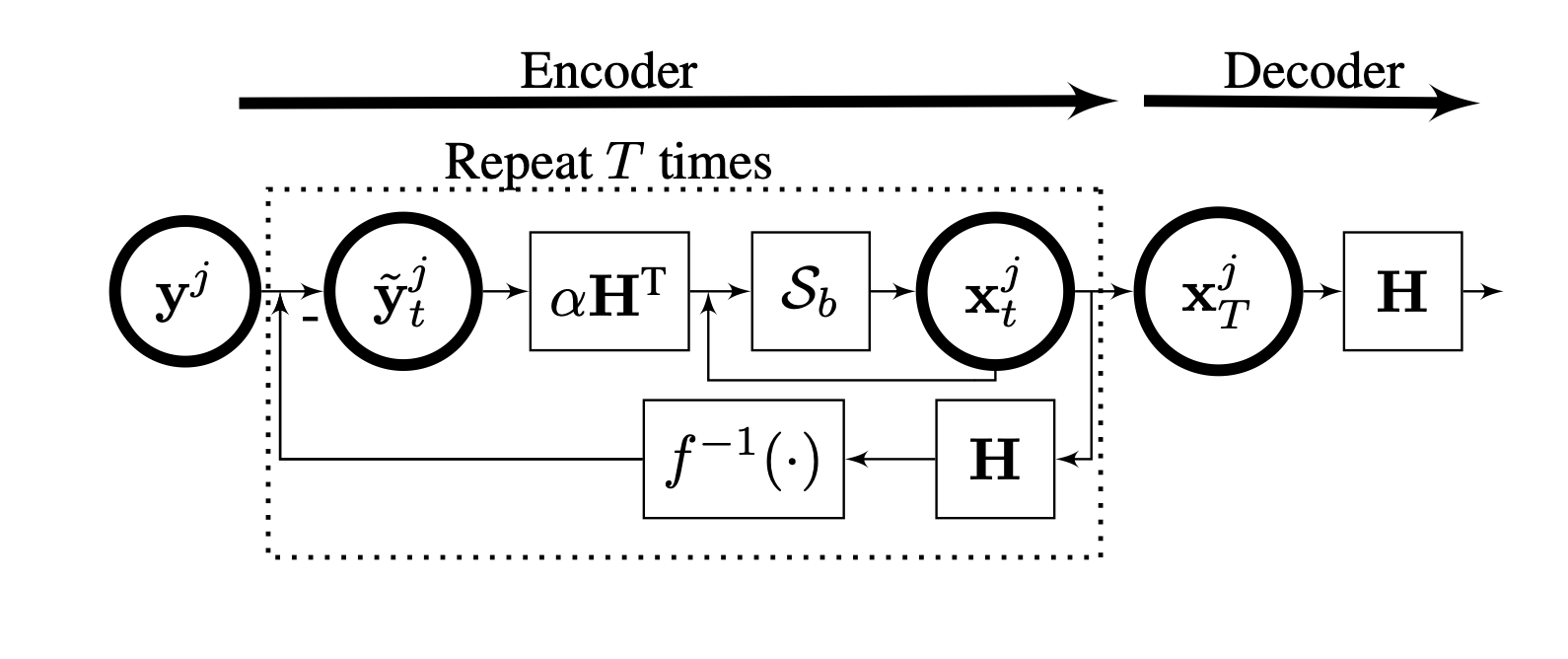

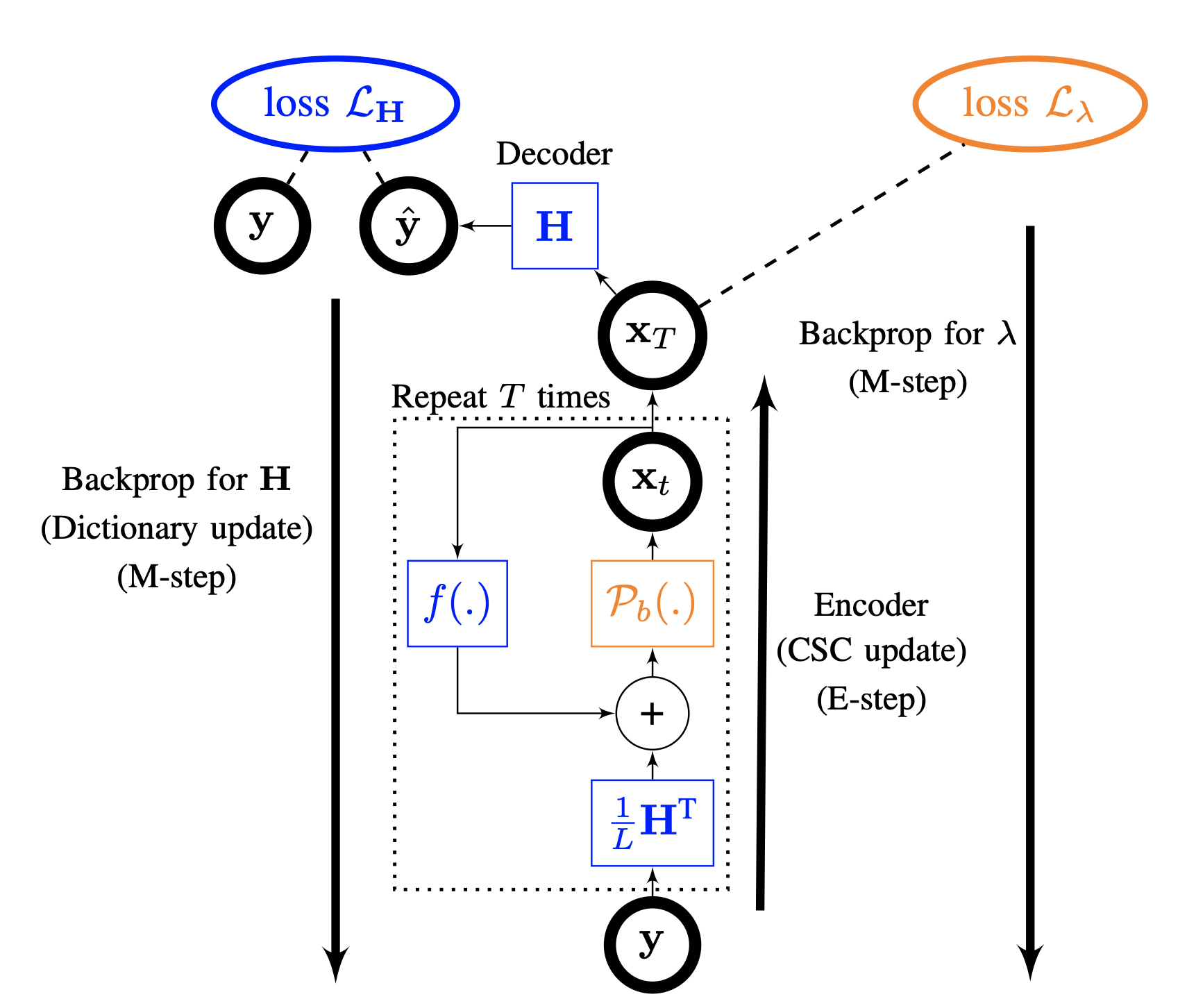

We introduce the constrained recurrent sparse autoencoder (CRsAE), an architecture based on dictionary learning. The encoder maps the data into a sparse representation, and the decoder reconstructs the data using a dictionary. When only a compressed version of the data is available, the network is called RandNet. RandNet recovers the data from its compressed measurements using a sparsity prior.
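A minimal forward-pass sketch of this encoder/decoder pair, assuming a dense dictionary `H`, an encoder given by unrolled FISTA iterations, and, in the compressed (RandNet-style) case, a known measurement matrix `Phi`: the encoder works on `Phi @ y` with the effective dictionary `Phi @ H`, while the decoder multiplies by `H` to return a full-resolution reconstruction. The function names and the use of FISTA here are our assumptions, not the published implementations.

```python
import numpy as np

def soft_threshold(v, lam):
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

def fista_encoder(z, A, lam, num_layers):
    """Unrolled FISTA for min_x 0.5||z - A x||^2 + lam*||x||_1."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    w = x.copy()        # extrapolated point
    t = 1.0             # momentum parameter
    for _ in range(num_layers):
        x_new = soft_threshold(w + step * A.T @ (z - A @ w), step * lam)
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        w = x_new + ((t - 1.0) / t_new) * (x_new - x)
        x, t = x_new, t_new
    return x

def autoencoder(y, H, lam, num_layers, Phi=None):
    """Encode y into a sparse code and decode with H.

    If a measurement matrix Phi is given, only Phi @ y is used by the
    encoder (compressed setting), but the decoder still outputs H @ x.
    """
    if Phi is None:
        z, A = y, H
    else:
        z, A = Phi @ y, Phi @ H
    x = fista_encoder(z, A, lam, num_layers)
    return x, H @ x
```

Because the decoder uses `H` rather than `Phi @ H`, training such a network on compressed inputs can still target the uncompressed signal.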

Relevant papers

[1] Tolooshams B., Dey S., Ba D. "Deep residual auto-encoders for expectation maximization-inspired dictionary learning", IEEE Transactions on Neural Networks and Learning Systems, 2020.

[2] Chang T., Tolooshams B., Ba D. "RandNet: deep learning with compressed measurements of images", International Workshop on Machine Learning for Signal Processing, 2019.

[3] Tolooshams B., Dey S., Ba D. "Scalable convolutional dictionary learning with constrained recurrent sparse auto-encoders", International Workshop on Machine Learning for Signal Processing, 2018.

Main researchers: Bahareh Tolooshams

Computational Neuroscience

The brain is a high-dimensional dynamical system that exhibits intricate dynamics at multiple spatial and temporal scales. As such, sophisticated data acquisition modalities (EEG, MEG, fMRI, calcium imaging and electrophysiology, to name a few) and behavioral experimental paradigms have been developed over the years to probe the dynamics of the brain at heterogeneous spatial and temporal scales. Two examples of the multiscale nature of neural activity come from electrophysiology and behavioral experiments. In electrophysiology, the spiking dynamics of neurons can exhibit variability both within a given trial (fast time scale) and across trials (slow time scale). In behavioral experiments, learning can involve dynamics within the confines of a session (fast time scale) and across sessions (slow time scale). State-of-the-art methods for analyzing neural data neglect this inherent multiscale nature. My group develops efficient algorithms for estimation, inference and fusion in multiscale stochastic process models, with applications primarily (but not exclusively) in neuroscience.

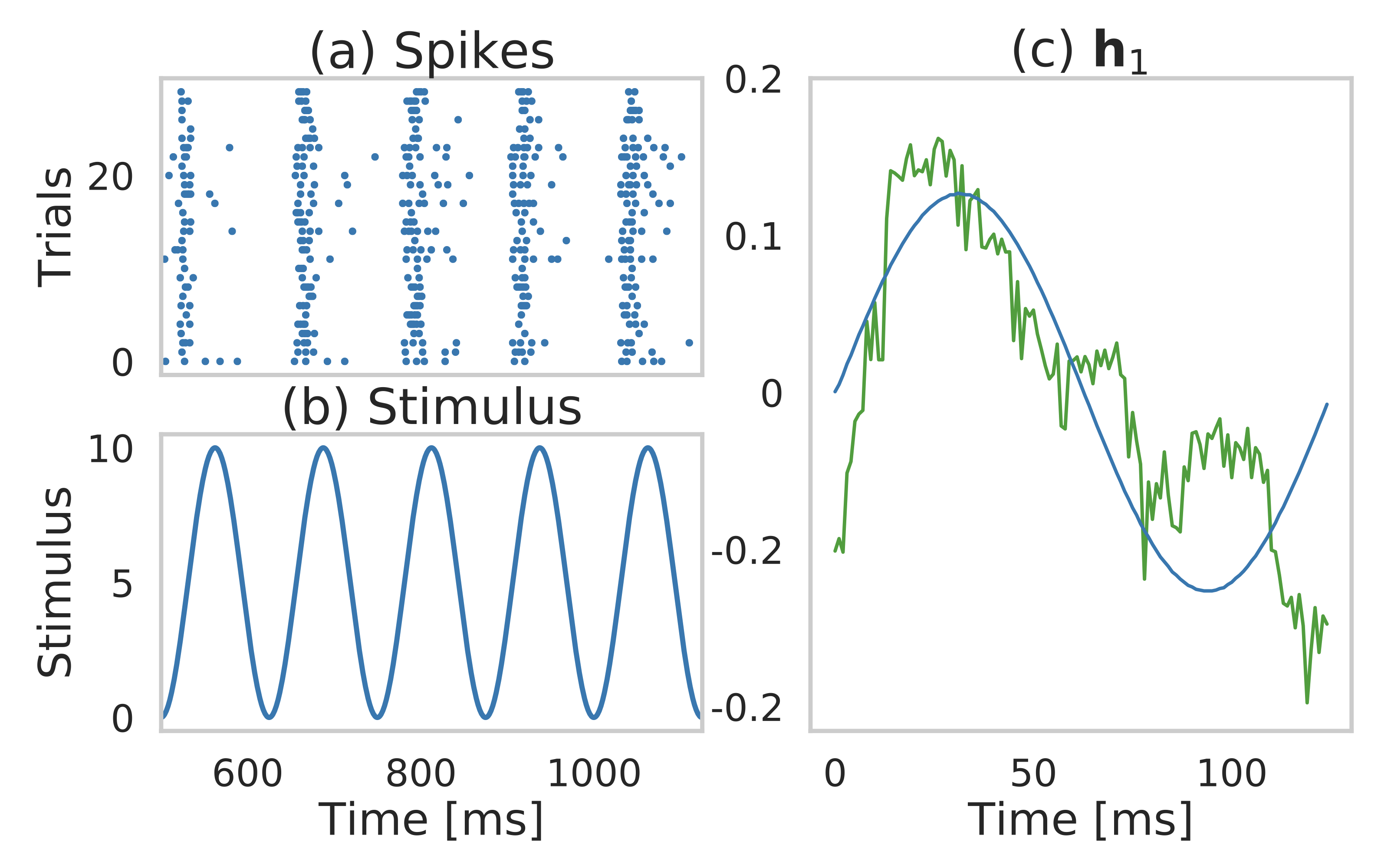

Learning stimuli via dictionary learning

The recording of neural activity in response to repeated presentations of an external stimulus is an established experimental paradigm in neuroscience. Generalized linear models (GLMs) are commonly used to describe how neurons encode external stimuli, by statistically characterizing the relationship between the covariates (the stimuli or features derived from them) and neural activity. This raises an important question: how should one choose appropriate covariates? We propose a data-driven answer that learns the covariates from the neural spiking data in an unsupervised manner and requires minimal user intervention. Using neural spiking data recorded from the barrel cortex of mice in response to periodic whisker deflections, we obtain a data-driven estimate of the covariates, related to whisker motion, that modulate neural activity.
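For reference, the classical GLM setting that the data-driven approach replaces looks like the sketch below: hand-chosen covariates in a matrix `C`, binned spike counts modeled as Poisson with a log link, and maximum likelihood fitted by Newton's method. The Poisson observation model, the solver, and the name `fit_poisson_glm` are assumptions for illustration; in the work above the covariates themselves are learned.

```python
import numpy as np

def fit_poisson_glm(C, counts, num_iters=25):
    """Newton's method for a Poisson GLM with log link:
        counts[t] ~ Poisson(exp(b + C[t] @ w)).
    C has one row of covariates per time bin; returns (b, w)."""
    X = np.column_stack([np.ones(len(C)), C])   # prepend an intercept column
    beta = np.zeros(X.shape[1])
    for _ in range(num_iters):
        rate = np.exp(X @ beta)
        grad = X.T @ (counts - rate)            # gradient of the log-likelihood
        hess = X.T @ (X * rate[:, None])        # negative Hessian (positive definite)
        beta = beta + np.linalg.solve(hess, grad)
    return beta[0], beta[1:]
```

The Poisson log-likelihood is concave in the weights, so Newton's method typically converges in a handful of iterations; the difficult part, which the data-driven approach addresses, is deciding what goes into `C`.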

Relevant papers

[1] Tolooshams B.†, Song A.†, Temereanca S., Ba D. "Auto-encoders for natural exponential-family dictionary learning", International Conference on Machine Learning, 2020.

[2] Song A.†, Tolooshams B.†, Temereanca S., Ba D. "Convolutional dictionary learning of stimulus from spiking data", Computational and Systems Neuroscience, 2020.

Main researchers: Bahareh Tolooshams, Andrew Song

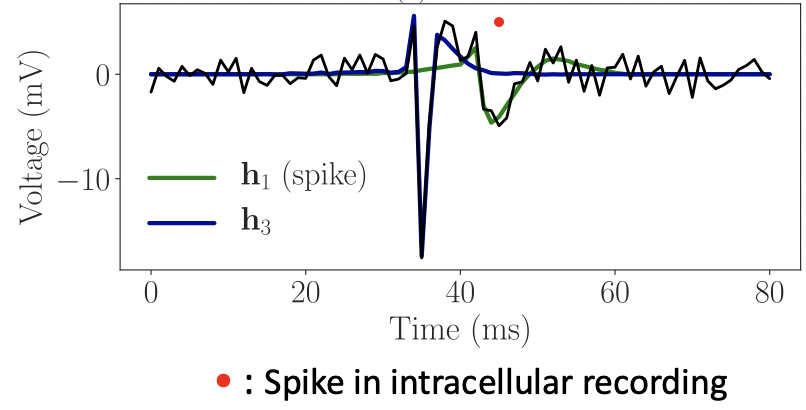

Spike sorting using dictionary learning

Decades of research in experimental neuroscience suggest that neurons communicate through spikes, which makes accurate spike sorting from raw electrophysiology data essential. In these projects, we cast spike sorting as a convolutional dictionary learning problem and propose 1) optimization-based and 2) autoencoder-based approaches. We demonstrate that these frameworks not only yield more accurate results than the baselines but are also much more computationally efficient.
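The convolutional view of spike sorting models a recording as a sum of spike waveforms (dictionary elements) placed at sparse onsets. As a deliberately simple stand-in for the methods above, the sketch below runs greedy convolutional matching pursuit: at each step it picks the template and shift with the largest correlation to the residual, records the event, and subtracts it. Unit-norm templates are assumed so that the correlation equals the least-squares amplitude; the function name and interface are hypothetical.

```python
import numpy as np

def conv_matching_pursuit(y, templates, num_spikes):
    """Greedy convolutional sparse coding of a 1D recording.

    y: 1D recording; templates: list of 1D unit-norm waveforms.
    Returns a list of (template_index, onset, amplitude) events and the residual.
    """
    residual = y.astype(float)
    events = []
    for _ in range(num_spikes):
        # Correlation of the residual with every template at every valid shift
        scores = [np.correlate(residual, h, mode="valid") for h in templates]
        c = int(np.argmax([np.max(np.abs(s)) for s in scores]))
        onset = int(np.argmax(np.abs(scores[c])))
        amp = scores[c][onset]
        residual[onset:onset + len(templates[c])] -= amp * templates[c]
        events.append((c, onset, amp))
    return events, residual
```

Greedy pursuit fixes the templates; dictionary learning alternates such a coding step with updates to the templates themselves.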

Relevant papers

[1] Song A., Flores F., Ba D. "Convolutional dictionary learning with grid refinement", IEEE Transactions on Signal Processing, 2020.

[2] Tolooshams B., Dey S., Ba D. "Deep residual auto-encoders for expectation maximization-inspired dictionary learning", IEEE Transactions on Neural Networks and Learning Systems, 2020.

Main researchers: Andrew Song, Bahareh Tolooshams

Clustering neural spiking data

Neural spiking data are an important source of information for understanding the brain. Analyzing the collective behavior of large assemblies of neurons brings further insight into how knowledge is acquired by humans and animals alike. In response to an external stimulus, sets of neurons often exhibit grouped response patterns. The identity of these neural groups, as well as the highly nonlinear dynamics of their firing trajectories, may inform us about how complex emotions such as fear are encoded at the inter-neuronal level. From a machine learning perspective, we tackle this problem by viewing it as an instance of (nonlinear) time series clustering. We develop a general statistical framework and apply it to a host of inferential questions in neuroscience.
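A key building block in clustering time series with nonlinear dynamics is scoring how well a series fits a candidate cluster's dynamics. The sketch below estimates that marginal likelihood with a bootstrap particle filter, assuming a latent state x_t = f(x_{t-1}) + N(0, q), Poisson spike counts y_t ~ Poisson(exp(x_t)), and x_0 = 0. The model, the function name, and the parameters are illustrative assumptions; the Bayesian nonparametric clustering machinery of [1] is not shown.

```python
import math
import numpy as np

def particle_log_likelihood(y, f, q, num_particles=1000, seed=0):
    """Bootstrap particle filter estimate of log p(y_1:T) for
        x_t = f(x_{t-1}) + N(0, q),   y_t ~ Poisson(exp(x_t)),   x_0 = 0.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(num_particles)
    loglik = 0.0
    for yt in y:
        x = f(x) + rng.normal(0.0, math.sqrt(q), size=num_particles)  # propagate
        logw = yt * x - np.exp(x) - math.lgamma(yt + 1)                # Poisson log-pmf
        m = logw.max()
        w = np.exp(logw - m)
        loglik += m + math.log(w.mean())                               # stable log-mean-exp
        idx = rng.choice(num_particles, size=num_particles, p=w / w.sum())
        x = x[idx]                                                     # multinomial resampling
    return loglik
```

A series can then be assigned to whichever candidate dynamics `f` yields the higher estimated log-likelihood.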

Relevant papers

[1] Lin A., Zhang Y., Heng J., Allsop S., Tye K., Jacob P., Ba D. "Clustering time series with nonlinear dynamics: a Bayesian non-parametric and particle-based approach", International Conference on Artificial Intelligence and Statistics, 2019.

Main researchers: Alex Lin

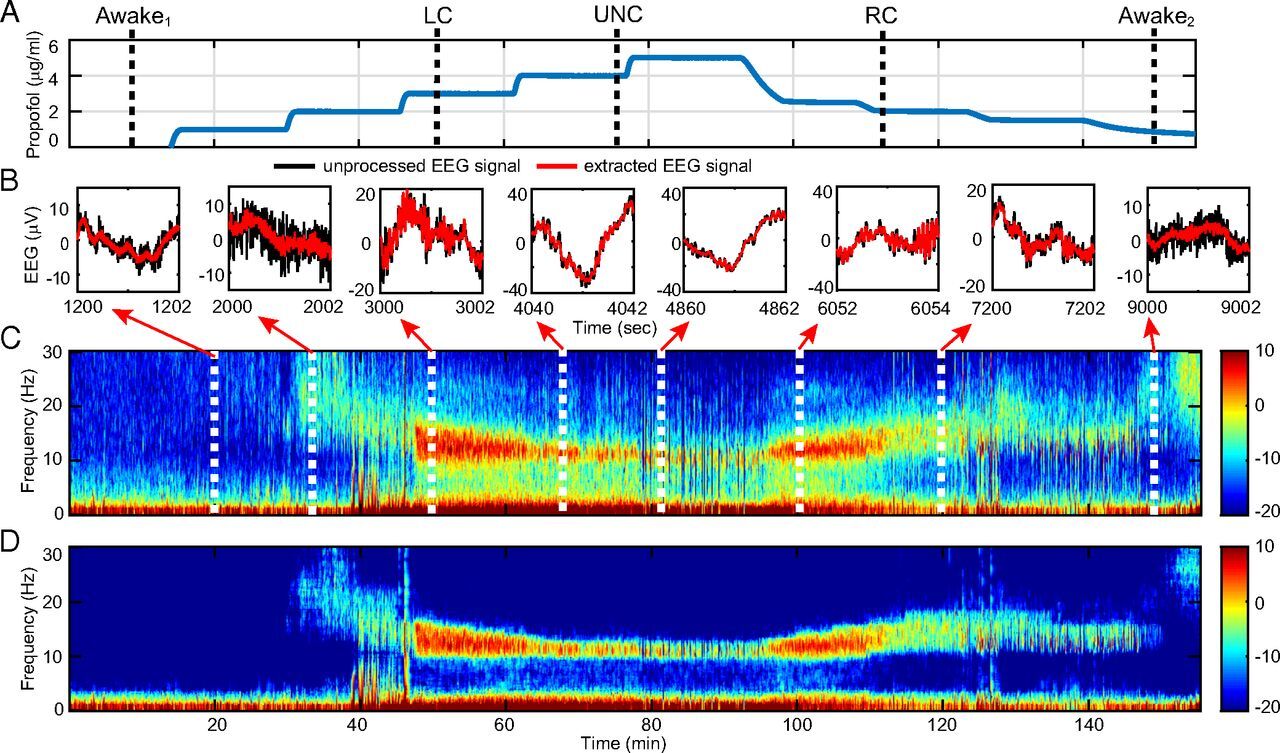

State-space multitaper time-frequency analysis

Rapid advances in sensor and recording technologies are spurring rapid growth in time series data. Nonstationary and oscillatory structure in time series is commonly analyzed using time-varying spectral methods, but these widely used techniques lack a statistical inference framework applicable to the entire time series. We develop a state-space multitaper (SS-MT) framework for time-varying spectral analysis of nonstationary time series. We implement the SS-MT spectrogram estimation algorithm efficiently in the frequency domain as parallel 1D complex Kalman filters. In analyses of human EEGs recorded under general anesthesia, the SS-MT paradigm provides enhanced denoising (>10 dB) and spectral resolution relative to standard multitaper methods, a flexible time-domain decomposition of the time series, and a broadly applicable empirical Bayes framework for statistical inference.
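The "parallel 1D complex Kalman filters" idea can be sketched as follows, under simplifying assumptions: the signal is split into non-overlapping windows, each taper's Fourier coefficient at each frequency follows a random walk Z_k = Z_{k-1} + v_k observed as Y_k = Z_k + e_k, and the noise variances `sigma_v2` and `sigma_e2` are known scalars shared by all frequencies (the actual framework estimates them). Every (taper, frequency) pair gets its own scalar filter, vectorized here as array operations, and the spectrogram is the taper average of |Z_k|^2. The function name and the initialization are assumptions.

```python
import numpy as np

def ss_mt_spectrogram(x, window, tapers, sigma_v2, sigma_e2):
    """State-space multitaper spectrogram sketch.

    x: 1D signal split into non-overlapping windows of length `window`.
    tapers: (M, window) array of taper sequences (e.g. DPSS tapers).
    Returns a (num_windows, window) array of taper-averaged |Z_k|^2.
    """
    K = len(x) // window
    frames = x[:K * window].reshape(K, window)
    M = tapers.shape[0]
    Z = np.zeros((M, window), dtype=complex)   # filtered Fourier coefficients
    P = np.full((M, window), sigma_e2)         # posterior variances (assumed initialization)
    spec = np.zeros((K, window))
    for k in range(K):
        Y = np.fft.fft(frames[k] * tapers, axis=1)   # (M, window) tapered DFTs
        P_pred = P + sigma_v2                        # random-walk prediction
        gain = P_pred / (P_pred + sigma_e2)          # Kalman gain, one per filter
        Z = Z + gain * (Y - Z)                       # measurement update
        P = (1.0 - gain) * P_pred
        spec[k] = np.mean(np.abs(Z) ** 2, axis=0)
    return spec
```

Because the filters share no state across frequencies, they can run in parallel, and the gain sets how strongly each window's noisy periodogram is smoothed toward the previous estimate.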

Relevant papers

[1] Kim S-E., Behr M., Ba D., Brown E. "State-space multitaper time-frequency analysis", Proceedings of the National Academy of Sciences, 2018.

Main researchers: Demba Ba

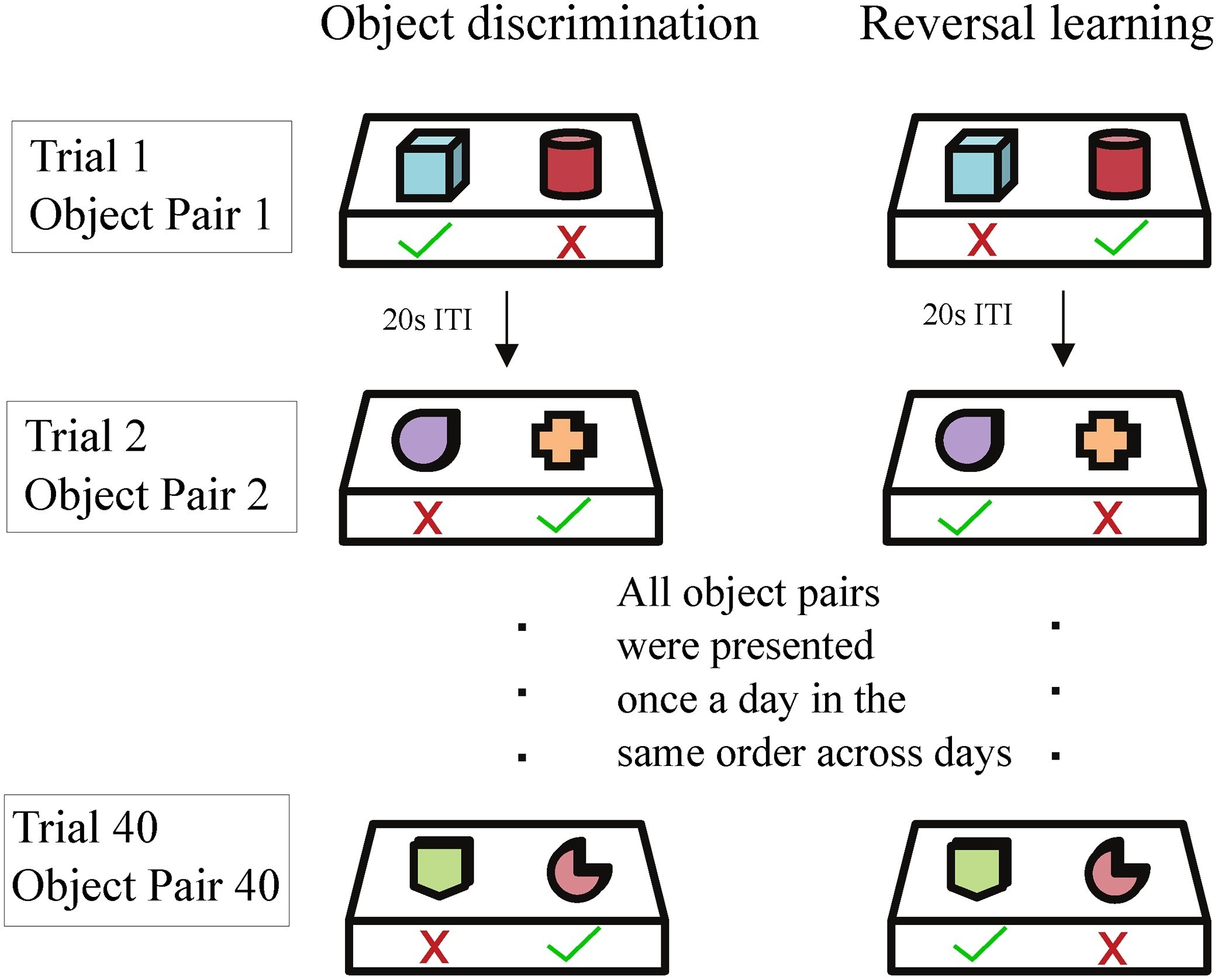

Teaching new tricks

The study of learning in populations of subjects can provide insights into the changes that occur in the brain with aging, drug intervention, and psychiatric disease. Obtaining objective measures of behavioral change is challenging because learning is dynamic, varies between individuals, and is frequently observed through binary responses. We introduce a method for analyzing binary response data acquired during the learning of object-reward associations across multiple days. The method can quantify the variability of performance within a day and across days, and can capture abrupt changes in learning, such as those caused by a reversal in the reward. The backbone of the method is a separable two-dimensional random field model for binary response data from multi-day behavioral experiments. Using data from young and aged female macaque monkeys performing a reversal-learning task, we show that the model is well suited for discriminating between-group differences and identifying subgroups.
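To illustrate what "separable" means here, the sketch below simulates binary responses whose log-odds decompose as logit p[d, t] = a[d] + b[t], with a slow random walk a across days and a within-day random walk b across trials. This additive form, the shared within-day profile, and the function name are simplifying assumptions for illustration, not the exact model of [1] (which, for instance, also accommodates abrupt changes such as reversals).

```python
import numpy as np

def simulate_separable_binary(num_days, trials_per_day, sd_day, sd_trial, seed=0):
    """Simulate binary responses with separable log-odds
        logit p[d, t] = a[d] + b[t],
    where a is a random walk across days and b a random walk across trials
    within a day (shared by all days in this simplified sketch)."""
    rng = np.random.default_rng(seed)
    a = np.cumsum(rng.normal(0.0, sd_day, num_days))          # across-day component
    b = np.cumsum(rng.normal(0.0, sd_trial, trials_per_day))  # within-day component
    logit = a[:, None] + b[None, :]
    p = 1.0 / (1.0 + np.exp(-logit))
    responses = (rng.random(p.shape) < p).astype(int)
    return responses, p
```

Separability means the day-by-trial log-odds matrix is fully described by one profile per axis, so double-centering it (removing row and column means) leaves nothing; this is what keeps the number of parameters manageable for multi-day data.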

Relevant papers

[1] Malem-Shinitski N., Zhang Y., Gray D., Burke S., Smith A., Barnes C., Ba D. "A separable two-dimensional random field model of binary response data from multi-day behavioral experiments", Journal of Neuroscience Methods, 2018.

[2] Malem-Shinitski N., Zhang Y., Gray D., Burke S., Smith A., Barnes C., Ba D. "Can you teach an old monkey a new trick?", Computational and Systems Neuroscience, 2017.

Main researchers: Diana Zhang

Estimating a separably-Markov random field

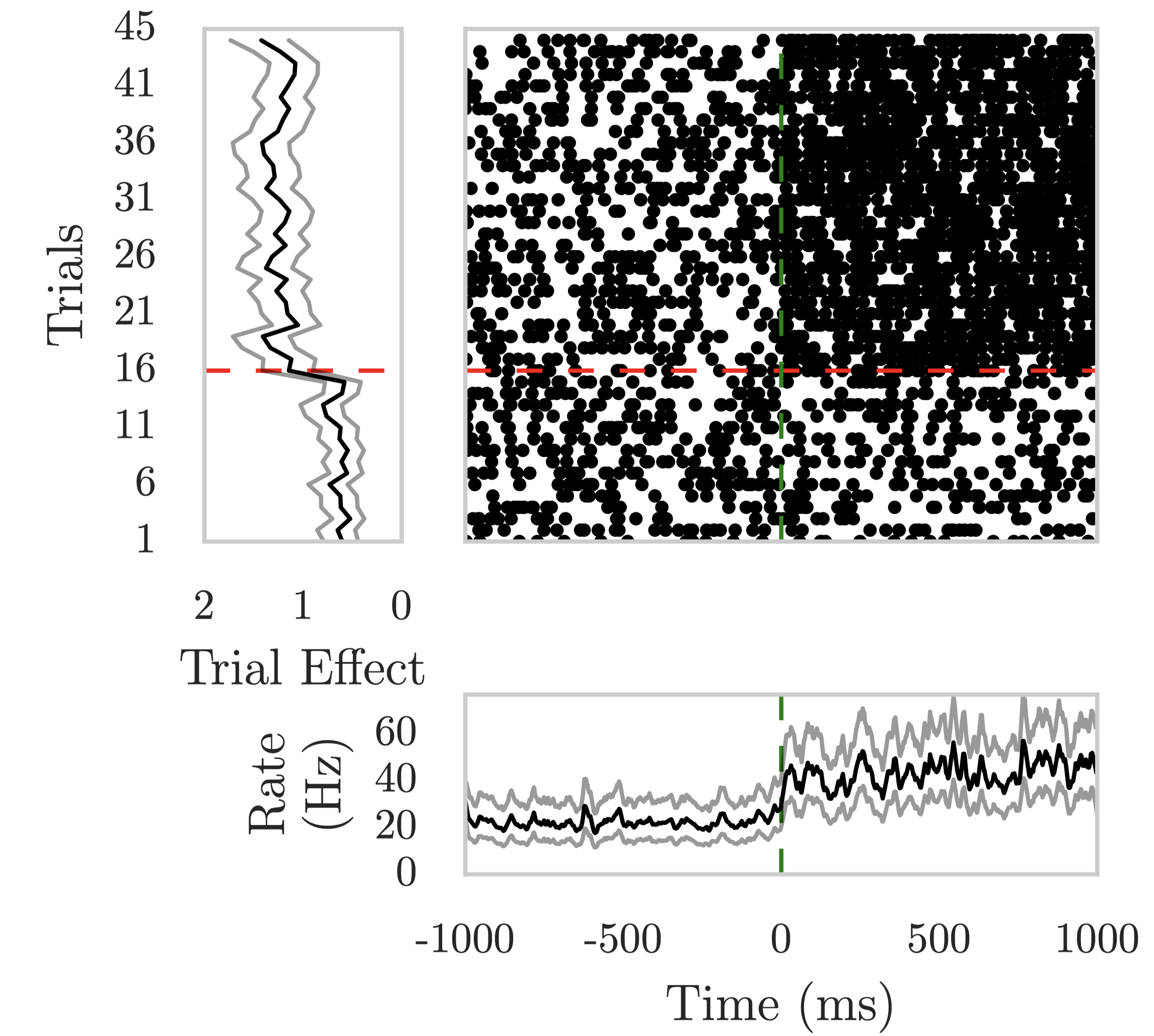

A fundamental problem in neuroscience is to characterize the spiking dynamics of neurons in a circuit involved in learning about a stimulus or a contingency. Classical methods for analyzing neural spiking data collapse neural activity over time or across trials, which may discard information pertinent to understanding the function of a neuron or circuit. We introduce a new method that can determine not only the trial-to-trial dynamics that accompany the learning of a contingency by a neuron, but also the latency of this learning with respect to the onset of a conditioned stimulus. The backbone of the method is a separable two-dimensional random field model of neural spike rasters. We develop efficient statistical and computational tools to estimate the parameters of the separable 2D random field model, and apply them to data collected from neurons in the prefrontal cortex in an experiment designed to characterize the neural underpinnings of associative fear learning in mice.
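As a toy version of estimating a separable model from a spike raster without collapsing over trials or time, the sketch below fits logit P(spike at trial k, bin l) = x[k] + w[l] by penalized maximum likelihood. It uses simple gradient ascent on the Bernoulli log-likelihood rather than the state-space estimation tools developed in [1]; the additive form, the small ridge penalty, and the function name are our assumptions.

```python
import numpy as np

def fit_separable_logit(raster, num_iters=2000, lr=0.5, ridge=1e-3):
    """Penalized maximum likelihood for a K x L binary spike raster under
        logit P(spike at trial k, bin l) = x[k] + w[l].
    Gradient ascent on the Bernoulli log-likelihood, with each coordinate's
    gradient averaged over the raster entries it affects. The small ridge
    penalty pins down the shared offset between x and w, which the
    likelihood alone cannot identify."""
    K, L = raster.shape
    x = np.zeros(K)   # trial-to-trial (across-trial) component
    w = np.zeros(L)   # within-trial component
    for _ in range(num_iters):
        p = 1.0 / (1.0 + np.exp(-(x[:, None] + w[None, :])))
        r = raster - p                                 # d loglik / d logit
        x += lr * (r.mean(axis=1) - ridge * x)
        w += lr * (r.mean(axis=0) - ridge * w)
    return x, w
```

The fitted `x` tracks how spiking changes from trial to trial (for example as a contingency is learned), while `w` captures the within-trial time course, so neither dimension is averaged away.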

Relevant papers

[1] Zhang Y., Malem-Shinitski N., Allsop S., Tye K., Ba D. "Estimating a separably-Markov random field (SMuRF) from binary observations", Neural Computation, 2018.

[2] Zhang Y., Malem-Shinitski N., Allsop S., Tye K., Ba D. "A two-dimensional separable random field model of within and cross-trial neural spiking dynamics", Computational and Systems Neuroscience, 2017.

Main researchers: Diana Zhang